Differential expression¶

- A gene or transcript is declared differentially expressed if an observed change in read counts between two experimental conditions is statistically significant, i.e. if the difference is greater than what would be expected just due to random variation.

- Detecting differentially expressed genes/transcripts across sample groups is among the major goals in the statistical analysis of RNA-seq data.

df.head(10)

| sample_01 | sample_02 | sample_03 | sample_04 | sample_05 | sample_06 | sample_07 | sample_08 | sample_09 | sample_10 | |

|---|---|---|---|---|---|---|---|---|---|---|

| AAR2 | 1114 | 506 | 1320 | 580 | 1415 | 962 | 1580 | 1543 | 343 | 440 |

| ABALON | 2185 | 1002 | 3097 | 1157 | 3323 | 2561 | 2284 | 3396 | 642 | 945 |

| ABBA01031660.1 | 542 | 232 | 750 | 378 | 832 | 615 | 720 | 866 | 138 | 236 |

| ABBA01031661.1 | 925 | 428 | 1142 | 488 | 1192 | 1088 | 1153 | 1454 | 360 | 369 |

| ABBA01031663.1 | 635 | 249 | 697 | 329 | 1182 | 499 | 769 | 953 | 169 | 238 |

| ABBA01031674.1 | 600 | 225 | 711 | 324 | 763 | 590 | 645 | 888 | 169 | 230 |

| ABHD12 | 2048 | 901 | 2467 | 1132 | 2819 | 2057 | 2294 | 2977 | 589 | 818 |

| ABHD16B | 2184 | 970 | 2964 | 1270 | 3148 | 2138 | 2796 | 3507 | 731 | 1045 |

| AC005220.1 | 2101 | 749 | 2459 | 1140 | 2518 | 1806 | 2111 | 2650 | 583 | 607 |

| AC005808.1 | 216 | 134 | 542 | 158 | 310 | 228 | 324 | 439 | 77 | 95 |

Between-sample normalization¶

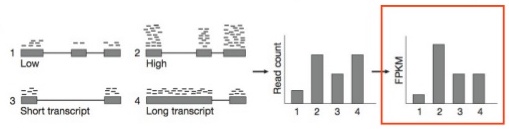

- The general point of between-sample normalization (BSN) is to be able to compare expression features (genes, transcripts) ACROSS experiments. This is not to be confused with within-sample normalization methods (comparing different features within a single sample).

- Most BSN methods address two issues :

- Variable sequencing depth (the total number of reads sequenced) between experiments.

- Finding a “control set” of expression features which should have relatively similar expression patterns across experiments (e.g. genes that are not differentially expressed) to serve as a baseline.

Harold Pimentel: In RNA-Seq, 2 != 2: Between-sample normalization

Harold Pimentel: In RNA-Seq, 2 != 2: Between-sample normalization

- One way to do the total count normalization would be to divide by the total counts :

| Gene | Control Counts | Treatment Counts | Control Normalized | Treatment Normalized |

|---|---|---|---|---|

| G1 | 2.00 | 6.00 | 0.20 | 0.06 |

| G2 | 2.00 | 6.00 | 0.20 | 0.06 |

| G3 | 2.00 | 6.00 | 0.20 | 0.06 |

| G4 | 2.00 | 6.00 | 0.20 | 0.06 |

| FG | 2.00 | 76.00 | 0.20 | 0.76 |

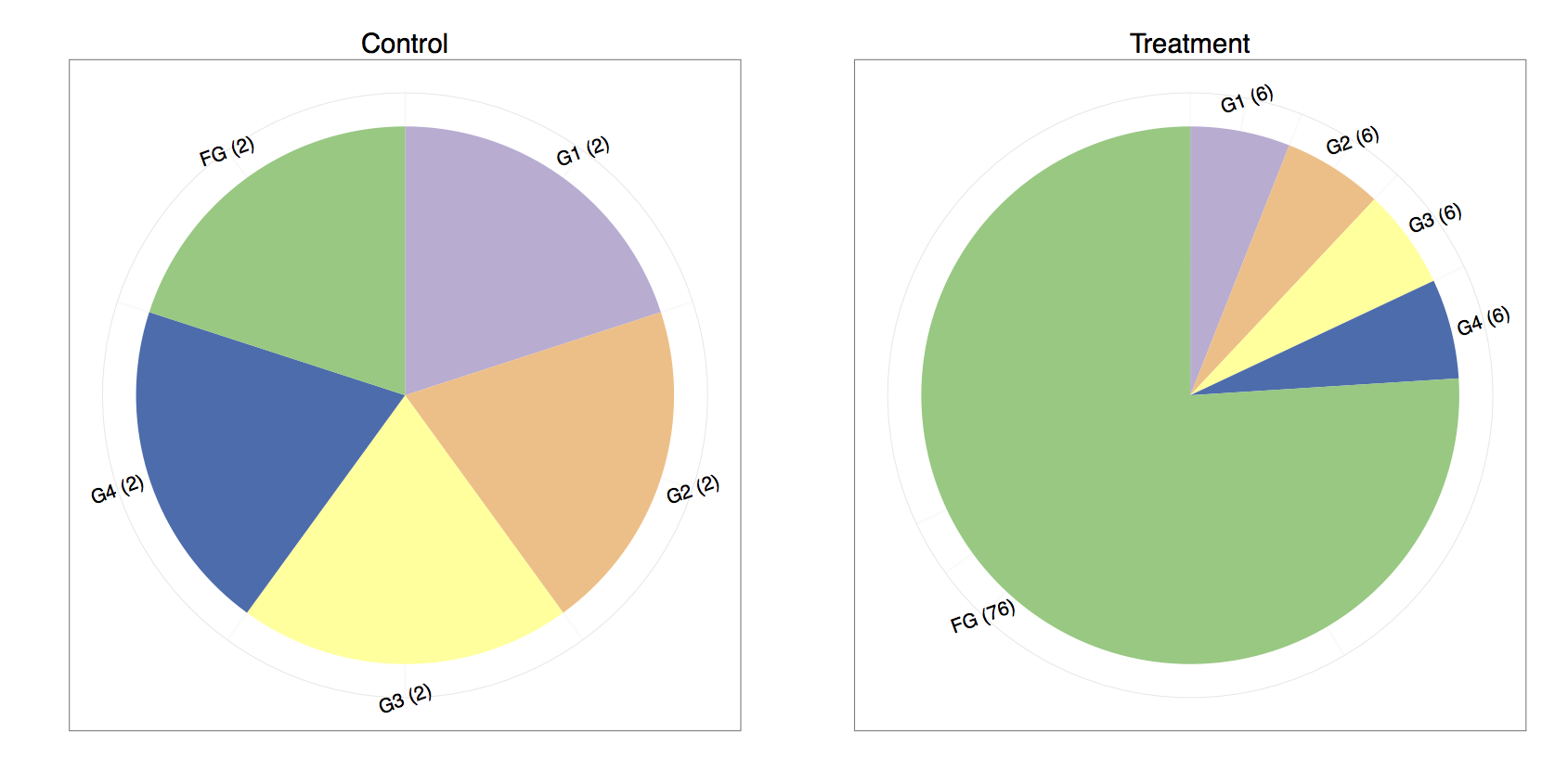

- If one compares the control and treatment proportions, it seems that every gene is differentially expressed. We are typically under the assumption that the majority of the genes do not change in expression. Under that assumption, it is much more plausible that genes one through four remain equally expressed, whereas “funky gene” (FG) is the only differentially expressed gene.

- We might consider normalizing by the sum of the total counts while omitting the last gene since its highly overexpressed and is throwing off the normalization :

| Gene | Control Counts | Treatment Counts | Control Normalized | Treatment Normalized |

|---|---|---|---|---|

| G1 | 2.00 | 6.00 | 0.25 | 0.25 |

| G2 | 2.00 | 6.00 | 0.25 | 0.25 |

| G3 | 2.00 | 6.00 | 0.25 | 0.25 |

| G4 | 2.00 | 6.00 | 0.25 | 0.25 |

| FG | 2.00 | 76.00 | 0.25 | 3.17 |

- Between sample normalization procedures estimate sample-specific normalization factors that are used to rescale the observed counts.

- Using these normalization methods, the sum of the normalized counts across all genes are therefore not necessarily equal between samples (as it would be if only the library sizes were used for normalization), but the goal is instead to make the normalized counts for non-differentially expressed genes similar between the samples.

- Popular examples of these normalization procedures are TMM (trimmed mean of M-values) and DESeq. These two methods perform similarly and are both based on an assumption that most genes are equivalently expressed in the samples, and that the differentially expressed genes are divided more or less equally between up- and downregulation.

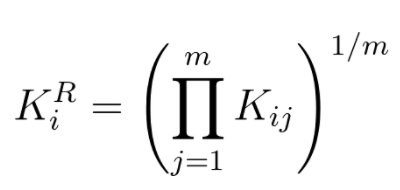

- With DESeq sample-specific normalization constants are estimated with the median-of-ratios method:

- Let's try estimating sample specific normalization factor for the two dummy samples from the example. We first calculate the geometric means for each gene:

| Gene | Control Counts | Treatment Counts | KiR |

|---|---|---|---|

| G1 | 2.00 | 6.00 | 3.464 |

| G2 | 2.00 | 6.00 | 3.464 |

| G3 | 2.00 | 6.00 | 3.464 |

| G4 | 2.00 | 6.00 | 3.464 |

| FG | 2.00 | 76.00 | 12.329 |

- Next we divide each count with the geometric mean expression for that gene:

| Gene | Control Counts/KiR | Treatment Counts/KiR |

|---|---|---|

| G1 | 0.57735 | 1.73205 |

| G2 | 0.57735 | 1.73205 |

| G3 | 0.57735 | 1.73205 |

| G4 | 0.57735 | 1.73205 |

| FG | 0.16222 | 6.16441 |

- It is obvious that sample specific normalization factors are 0.57735 and 1.73205 (sample medians).

- Finally we divide each sample by its normalization factor:

| Gene | Control Normalized | Treatment Normalized |

|---|---|---|

| G1 | 3.4641 | 3.4641 |

| G2 | 3.4641 | 3.4641 |

| G3 | 3.4641 | 3.4641 |

| G4 | 3.4641 | 3.4641 |

| FG | 3.4641 | 43.87864 |

Normalized, this is how our simulated dataset looks like:

normdf.head(10)

| sample_01 | sample_02 | sample_03 | sample_04 | sample_05 | sample_06 | sample_07 | sample_08 | sample_09 | sample_10 | |

|---|---|---|---|---|---|---|---|---|---|---|

| AAR2 | 876.405542 | 918.567371 | 817.491511 | 797.269235 | 805.068272 | 766.348379 | 1104.531722 | 820.321403 | 853.134999 | 843.576787 |

| ABALON | 1718.982145 | 1818.981236 | 1918.008492 | 1590.414664 | 1890.630296 | 2040.143658 | 1596.677503 | 1805.451382 | 1596.829941 | 1811.772872 |

| ABBA01031660.1 | 426.401978 | 421.161324 | 464.483813 | 519.599605 | 473.368765 | 489.921261 | 503.330911 | 460.400735 | 343.243819 | 452.463913 |

| ABBA01031661.1 | 727.715553 | 776.970029 | 707.254019 | 670.805839 | 678.191788 | 866.722491 | 806.028529 | 773.005392 | 895.418658 | 707.454169 |

| ABBA01031663.1 | 499.566893 | 452.022283 | 431.660290 | 452.244101 | 672.502260 | 397.513349 | 537.585376 | 506.653465 | 420.349315 | 456.298353 |

| ABBA01031674.1 | 472.031710 | 408.453870 | 440.330655 | 445.371090 | 434.111019 | 470.005763 | 450.900608 | 472.096828 | 420.349315 | 440.960593 |

| ABHD12 | 1611.201571 | 1635.630832 | 1527.842089 | 1556.049611 | 1603.878063 | 1638.647210 | 1603.668210 | 1582.693983 | 1465.004416 | 1568.285935 |

| ABHD16B | 1718.195425 | 1760.890019 | 1835.640029 | 1745.744705 | 1791.063549 | 1703.173425 | 1954.601706 | 1864.463486 | 1818.197331 | 2003.494869 |

| AC005220.1 | 1652.897705 | 1359.697551 | 1522.887595 | 1567.046428 | 1432.623258 | 1438.695606 | 1475.738270 | 1408.847516 | 1450.080772 | 1163.752522 |

| AC005808.1 | 169.931416 | 243.256972 | 335.666969 | 217.187136 | 176.375381 | 181.629346 | 226.498910 | 233.390211 | 191.520102 | 182.135897 |

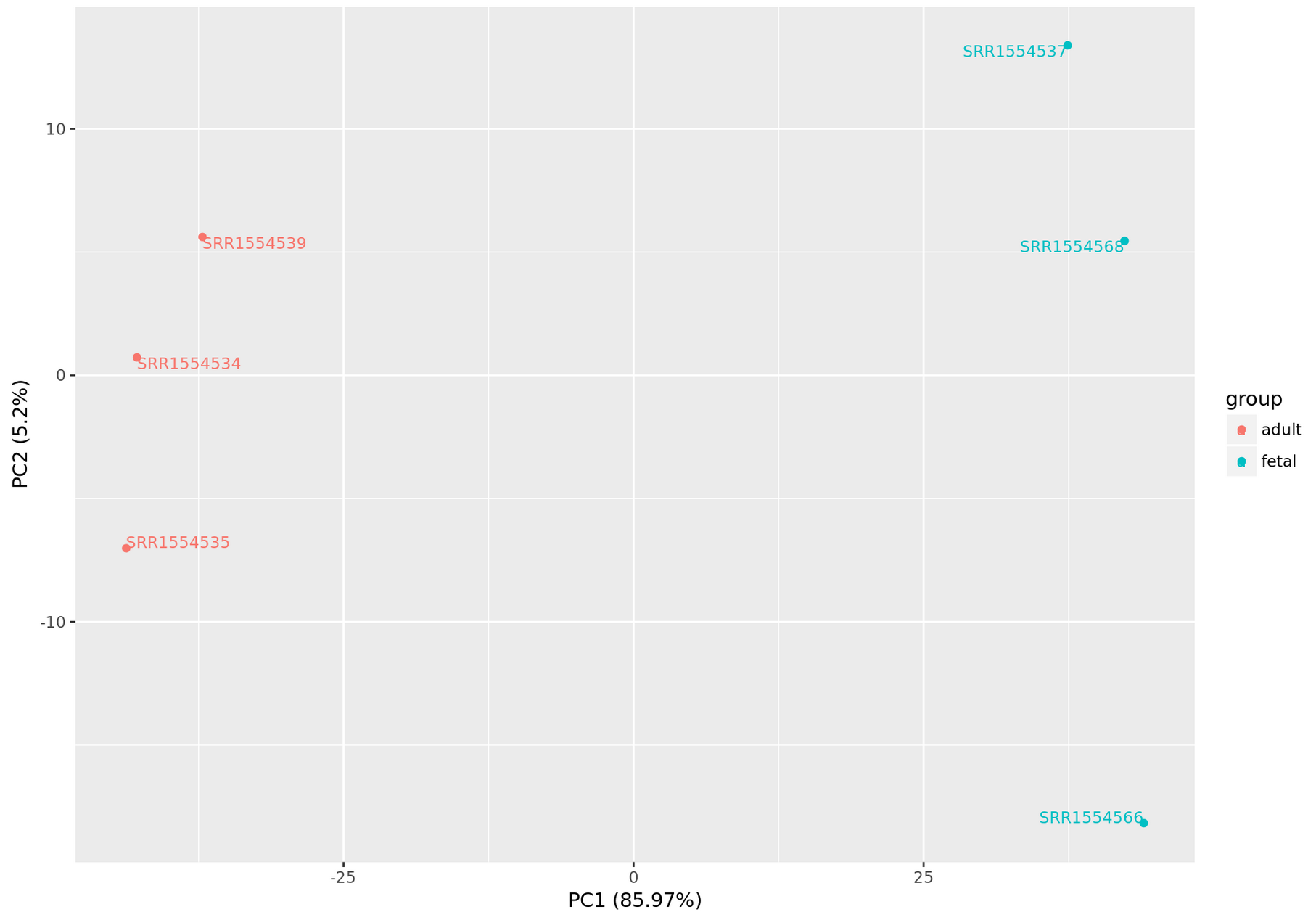

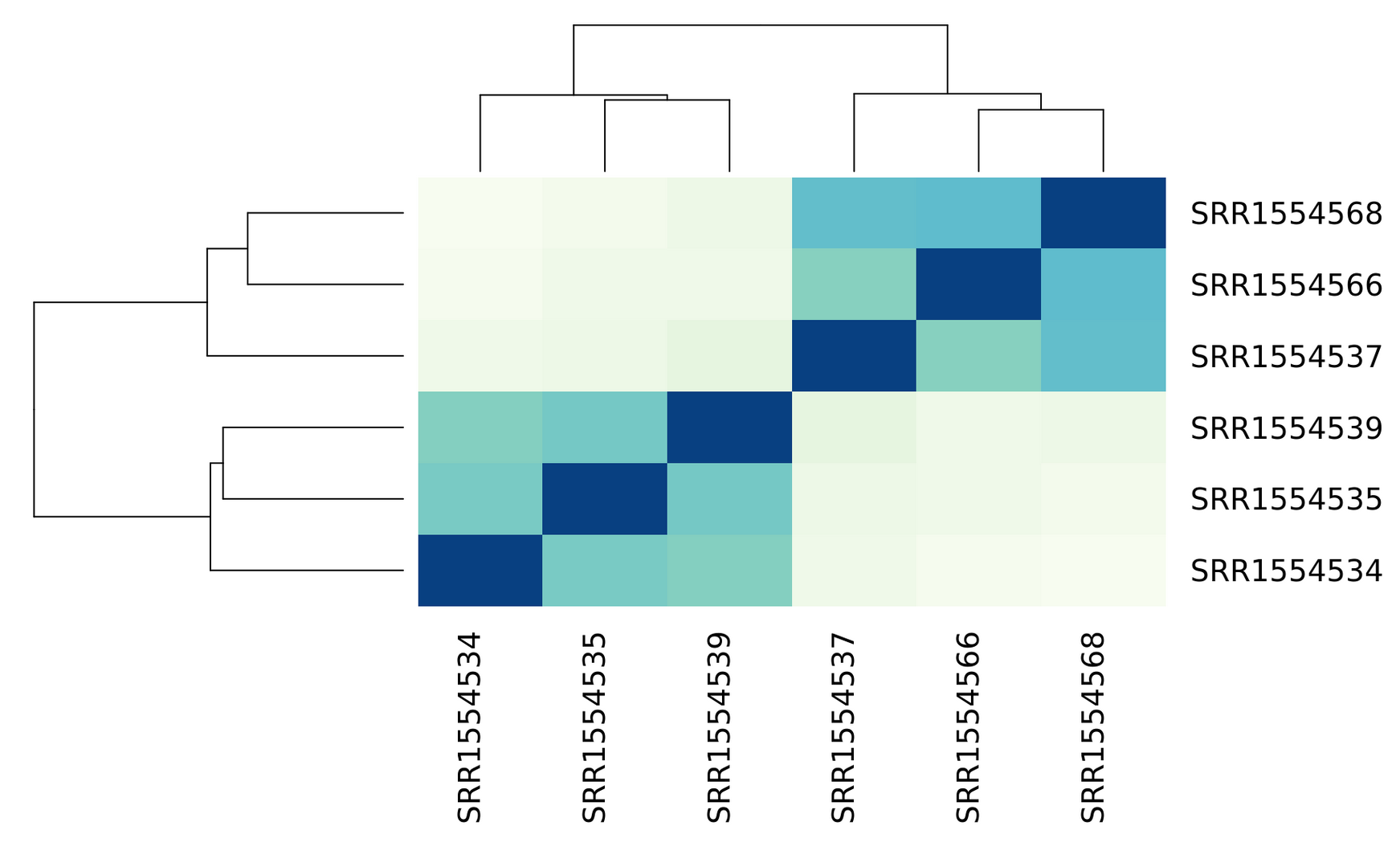

Exploratory analysis¶

Data quality assessment and quality control (i.e. the removal of insufficiently good data) are essential steps of any data analysis. These steps should typically be performed very early in the analysis of a new data set, preceding or in parallel to the differential expression testing.

The dataset we are investigating is simulated, but let us pretend that samples 6 through 10 are coming from the "treated" population and that samples 1 through 5 are "untreated" or "control" samples.

from sklearn.decomposition import PCA

from sklearn.preprocessing import StandardScaler

pca = PCA(n_components=2)

principalComponents = pca.fit_transform(normdf.T)

principalComponents

array([[ 7253.66901946, -1133.0858518 ],

[ 6777.83482879, -351.8259295 ],

[ 6777.5841612 , 1550.29503569],

[ 6891.70586134, -334.63078674],

[ 7100.44333788, 351.9640596 ],

[-7020.20823953, 2833.66966978],

[-7027.03427084, -2662.13331895],

[-7052.22861383, 957.48901253],

[-6578.86674374, -1450.58626455],

[-7122.89934072, 238.84437394]])

principalDf = pd.DataFrame(data = principalComponents

, columns = ['principal component 1', 'principal component 2'])

categories = np.array(["untreated", "treated"])

finalDf = pd.concat([principalDf, pd.DataFrame(np.repeat(categories, [5, 5], axis=0), columns=['condition'])], axis = 1)

finalDf

| principal component 1 | principal component 2 | condition | |

|---|---|---|---|

| 0 | 7253.669019 | -1133.085852 | untreated |

| 1 | 6777.834829 | -351.825929 | untreated |

| 2 | 6777.584161 | 1550.295036 | untreated |

| 3 | 6891.705861 | -334.630787 | untreated |

| 4 | 7100.443338 | 351.964060 | untreated |

| 5 | -7020.208240 | 2833.669670 | treated |

| 6 | -7027.034271 | -2662.133319 | treated |

| 7 | -7052.228614 | 957.489013 | treated |

| 8 | -6578.866744 | -1450.586265 | treated |

| 9 | -7122.899341 | 238.844374 | treated |

fig = plt.figure(figsize = (8,6))

ax = fig.add_subplot(1,1,1)

ax.set_xlabel('Principal Component 1', fontsize = 15)

ax.set_ylabel('Principal Component 2', fontsize = 15)

ax.set_title('2 Component PCA', fontsize = 20)

conditions = categories; colors = ['r', 'g']

for target, color in zip(conditions,colors):

indicesToKeep = finalDf['condition'] == target

ax.scatter(finalDf.loc[indicesToKeep, 'principal component 1'],

finalDf.loc[indicesToKeep, 'principal component 2'],

c = color, s = 50)

ax.legend(conditions); ax.grid()

Intro to statistical inference¶

For each gene, we are interested in differences in mean expression between the two sample groups.

import seaborn as sns

aux = {"norm" : normdf.loc['AL031055.1'], "group" : np.repeat(categories, [5, 5], axis=0)}

df = pd.DataFrame(data=aux)

sns.stripplot(x="group", y="norm", data=df, jitter=True).set_title('AL031055.1')

Text(0.5,1,'AL031055.1')

aux = {"norm" : normdf.loc['AL023803.2'], "group" : np.repeat(categories, [5, 5], axis=0)}

df = pd.DataFrame(data=aux)

sns.stripplot(x="group", y="norm", data=df, jitter=True).set_title('AL023803.2')

Text(0.5,1,'AL023803.2')

sns.boxplot(x="group", y="norm", data=df).set_title('AL023803.2')

Text(0.5,1,'AL023803.2')

cMean = normdf.loc['AL023803.2'][0:5].mean()

tMean = normdf.loc['AL023803.2'][5:10].mean()

print(cMean)

print(tMean)

354.8722544056803 402.20746388364734

diff = tMean - cMean

diff

47.335209477967055

So the expression level for AL023803.2 in treated samples is about 10% higher. But these averages are random variables. They can take many values.

If we repeat the experiment, by sequencing samples from a new batch of patients (or even resequencing the same ones) we get different means. Every time we repeat this experiment, we get a different value of mean difference.

When comparing measured values we need to be skeptical. How do we know that this difference is due to the treatment? What happens if compare two sets of untreated samples? Will we see a difference this big? Statisticians refer to this scenario as the null hypothesis. The name “null” is used to remind us that we are acting as skeptics: we give credence to the possibility that there is no difference.

So what do we do?

Because we do not have access to entire populations of untreated and treated samples, we cannot confidently say just by comparing the mean expression values that a gene is differentialy expressed.

We can perform a simple permutation test. Permutation tests take advantage of the fact that if we randomly shuffle the treated and untreated labels for the 10 measurements that we have - then the null is true. So we shuffle the labels, calculate the mean difference and assume that the ensuing distribution approximates the null distribution. Here is how we generate a null distribution by shuffling the data 1,000 times:

normexp = normdf.loc['AL023803.2']

np.random.seed(6)

means =[]

for i in range(1000) :

aux = np.random.permutation(normexp)

cmean = aux[0:5].mean()

tmean = aux[5:10].mean()

means.append(tmean-cmean)

plt.hist(means, bins = 20)

plt.axvline(diff, color='k', linestyle='dashed', linewidth=2)

plt.axvline(-diff, color='k', linestyle='dashed', linewidth=2)

plt.show()

absmeans = [abs(x) for x in means]

absdiff = abs(diff)

p = sum(absmeans > absdiff)/len(absmeans)

p

0.135

- Null hypothesis: there is no significant difference between specified populations, any observed difference being due to sampling or experimental error.

- The p-value is defined as the probability of obtaining a result equal to or "more extreme" than what was actually observed, when the null hypothesis is true.

- The alternative hypothesis is considered true if the statistic observed would be an unlikely realization of the null hypothesis according to the p-value.

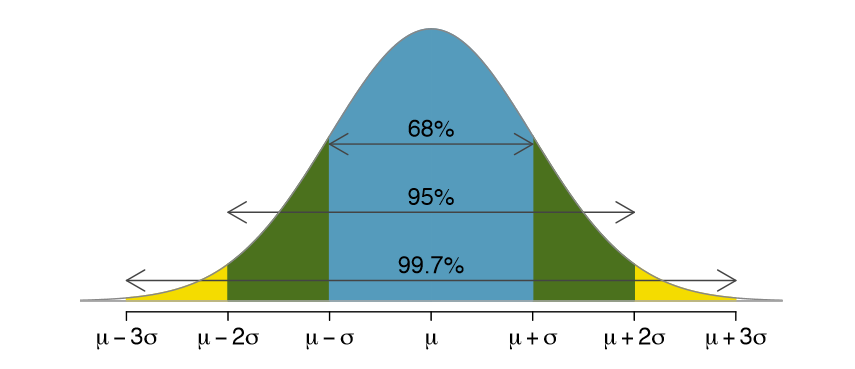

Central Limit Theorem¶

CLT: When the sample size is large, the average $\bar{Y}$ of a random sample follows a normal distribution centered at the population average $\mu_{Y}$ and with standard deviation equal to the population standard deviation $\sigma_{Y}$, divided by the square root of the sample size $N$.

We refer to the standard deviation of the distribution of a random variable as the random variable’s standard error.

https://rajeshrinet.github.io/blog/2014/central-limit-theorem/

Population mean and variance (deviation squred) are parameters defined as:

$$\mu_{Y} = \frac{1}{n}\sum_{i=1}^{n}y_{i}$$ $$\sigma_{Y}^2 = \frac{1}{n}\sum_{i=1}^{n}(y_{i}-\mu_{Y})^{2}$$

where $n$ is the number of elements in population.

Normal distribution $N(\mu, \sigma)$ is defined with the density function:

$$f(x)=\frac{1}{\sqrt{2\pi\sigma^{2}}}e^{-\frac{\left (x-\mu_{X} \right )^{2}}{2\sigma^{2}}}$$

If we subtract a constant from a random variable, the mean of the new random variable shifts by that constant. Mathematically, if $X$ is a random variable with mean $\mu$ and $a$ is a constant, the mean of $X − a$ is $\mu − a$. A similarly intuitive result holds for multiplication and the standard deviation (SD). If $X$ is a random variable with mean $\mu$ and SD $\sigma$, and $a$ is a constant, then the mean and SD of $aX$ are $a\mu$ and $| a | \sigma$ respectively.

Knowing this, the CLT implies that if we take many samples of size $N$, then the quantity:

$$\frac{\bar{Y}-\mu_{Y}}{\sigma_{Y}/\sqrt{N}}$$

is approximated with a normal distribution centered at 0 and with standard deviation 1.

If $X$ and $Y$ are two random variables independent of each other, then the variance of $X + Y$ is the sum of the variances $\sigma_{X}^{2} + \sigma_{Y}^{2}$. The sum (or the difference) of normal variables is again normal. Under the null hypothesis (when the mean difference is 0), the ratio:

$$\frac{\bar{Y}-\bar{X}}{\sqrt{\frac{\sigma_{Y}^{2}}{N}+\frac{\sigma_{X}^{2}}{M}}}$$

is approximated by a normal distribution centered at 0 and standard deviation 1. Using this approximation makes computing p-values simple because we know the proportion of the distribution under any value. For example, only 5% of these values are larger than 2 (in absolute value).

However, we can’t claim victory just yet because we don’t know the population standard deviations: $\sigma_{X}$ and $\sigma_{Y}$. These are unknown population parameters, but we can get around this by using the sample standard deviations, call them $s_{X}$ and $s_{Y}$. These are defined as:

$$s_{X}^2 = \frac{1}{M-1}\sum_{i=1}^{M}(X_{i}-\bar{X})^{2}$$ $$s_{Y}^2 = \frac{1}{N-1}\sum_{i=1}^{N}(Y_{i}-\bar{Y})^{2}$$

$M − 1$, $N − 1$: the degrees of freedom.

So we can redefine our ratio as:

$$\frac{\bar{Y}-\bar{X}}{\sqrt{\frac{s_{Y}^{2}}{N}+\frac{s_{X}^{2}}{M}}}$$

The CLT tells us that when $M$ and $N$ are large, this random variable is normally distributed with mean 0 and SD 1.

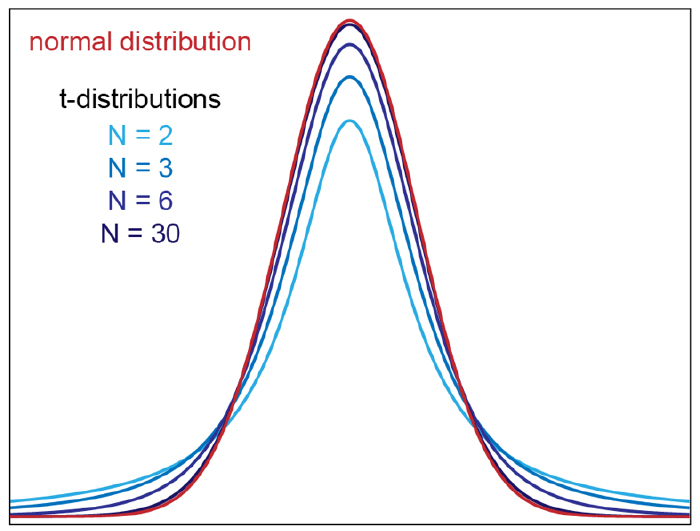

The t-distribution¶

The CLT relies on large samples, what we refer to as asymptotic results. When the CLT does not apply, there is another option that does not rely on asymptotic results. When the original population from which a random variable, say $Y$, is sampled is normally distributed with mean 0, then we can calculate the distribution of:

$$\sqrt{N}\frac{\bar{Y}-0}{\sigma_{Y}}$$

This is the ratio of two random variables so it is not necessarily normal. The fact that the denominator can be small by chance increases the probability of observing large values. In 1908. William Sealy Gosset, an employee of the Guinness brewing company, deciphered the distribution of this random variable and published a paper under the pseudonym “Student”. The distribution is therefore called Student’s t-distribution.

$$f(t)=\frac{\Gamma(\frac{\nu+1}{2})}{\sqrt{\nu\pi}\Gamma(\frac{\nu}{2})}\left (1+\frac{t^2}{\nu} \right )^{-\frac{\nu+1}{2}}$$

where $\nu$ is the degrees of freedom.

So for small sample sizes we shall be computing that same ratio:

$$\frac{\bar{Y}-\bar{X}}{\sqrt{\frac{s_{Y}^{2}}{N}+\frac{s_{X}^{2}}{M}}}$$

only this time we shall be comparing it to the t-distribution instead of normal. All such tests are usually called Student's t-tests, though strictly speaking that name should only be used if the variances of the two populations are also assumed to be equal; the form of the test used when this assumption is dropped is sometimes called Welch's t-test and that's the one that we'll be implementing.

def welch_test(a, b):

# pandas.Series.var() returns an unbiased variance estimate, normalized by N-1 by default

var_a = a.var()

var_b = b.var()

Na = len(a)

Nb = len(b)

# std deviation estimate

s = np.sqrt(var_a/Na + var_b/Nb)

# calculate the t-statistics

t = (a.mean() - b.mean())/s

# degrees of freedom - Welch–Satterthwaite equation, yeah it's a bit more complicated than Na + Nb - 2

deg = (((var_a)/Na + (var_b)/Nb)**2)/ ((var_a**2)/(Na**2*(Na-1)) + (var_b**2)/(Nb**2*(Nb-1)))

# p-value after comparison with the t

p = 2*stats.t.sf(np.abs(t), deg)

return (p)

p = [(index, welch_test(row.values[0:5], row.values[5:10])) for index, row in normdf.iterrows()]

res = pd.DataFrame(p, columns=['gene', 'pval'])

res = res.set_index('gene')

res.shape

(1347, 1)

res.head(6)

| pval | |

|---|---|

| gene | |

| AAR2 | 0.565876 |

| ABALON | 0.855394 |

| ABBA01031660.1 | 0.720978 |

| ABBA01031661.1 | 0.028195 |

| ABBA01031663.1 | 0.437817 |

| ABBA01031674.1 | 0.414137 |

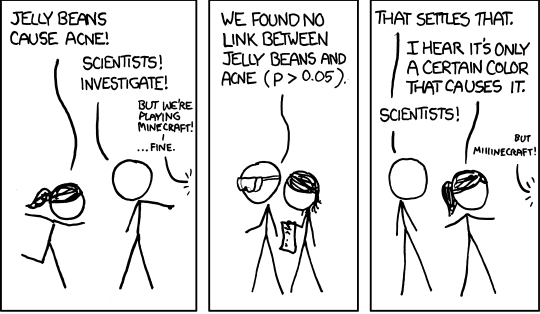

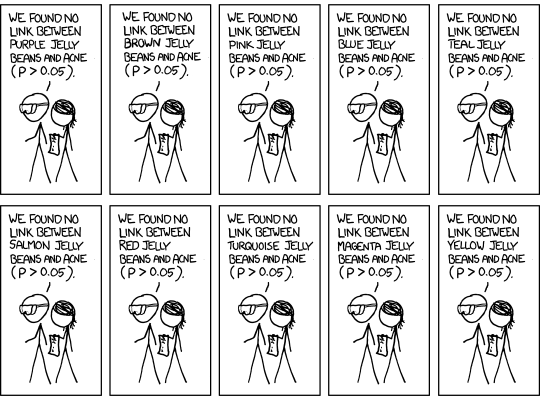

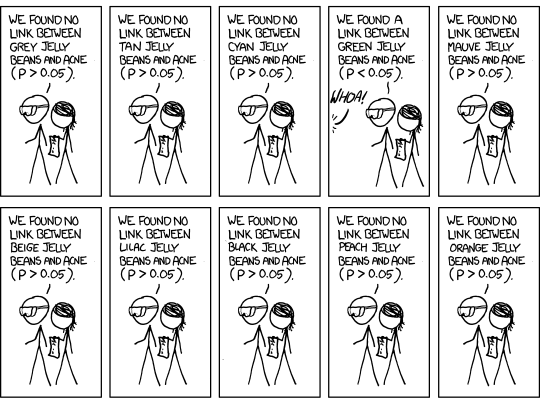

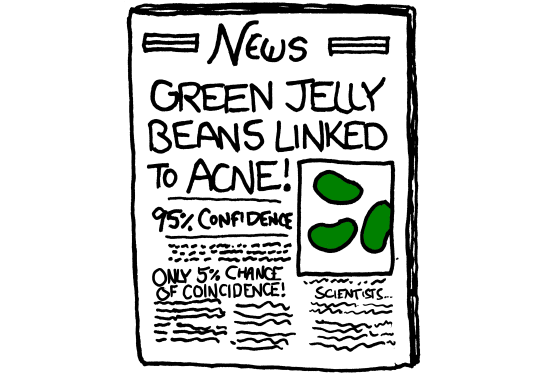

Multiple testing¶

- In genomic studies you don't usually fit just one regression model or calculate just one p-value. You calculate many p-values.

- In our simulated dataset, just from chromosome 20 (which is one of the shortest in human genome) we have 1347 genes.

- If you use the standard cutoff of 0.05 when calling p-value significant, you'll know that since p-values are uniformly distributed when the null is true, about 1 out of every 20 times you'll still call that result statistically significant, even when there is no true biological effect.

np.random.seed(6)

pvals =[]

for i in range(20000) :

firstg = np.random.normal(32, 5, 20)

secondg = np.random.normal(32, 5, 20)

pval = welch_test(firstg, secondg)

pvals.append(pval)

plt.hist(pvals, bins = 20)

plt.show()

res['pval'].plot.hist(bins=20)

<matplotlib.axes._subplots.AxesSubplot at 0x1a1eb558d0>

| Called significant | Not called significant | Total | |

|---|---|---|---|

| Null true | $V$ | $n_{0} - V$ | $n_{0}$ |

| Alternative true | $S$ | $n_{1} - S$ | $n_{1}$ |

| Total | $R$ | $n - R$ | $n$ |

- $V$ represents the number of type I errors (false positives);

- $n_{1} - S$ represents the number of type II errors (false negatives);

- $S$ is the number of true positives and $n_{0} - V$ the number of true negatives.

A multiple testing correction procedure is needed to adjust the statistical confidence measures based on the number of tests performed. Different error rates are defined for this purpose:

- Family wise error rate:

$$FWER = P_{r}\left (V \geq 1 \right ) = 1 - P_{r}\left (V = 0 \right )$$

is the probability of making one or more false discoveries, or type I errors when performing multiple hypotheses tests.

- False discovery rate:

$$FDR=E\left \lfloor \frac{V}{V+S} \right \rfloor$$

is the expected proportion of "discoveries" (rejected null hypotheses) that are false (incorrect rejections).

Suppose 1200 out of 20,000 genes are found significant at 0.05 level (threshold $\alpha = 0.05$).

- No correction: expect 0.05 * 20,000 = 1000 false positives

- False Discovery Rate correction: expect 0.05 * 1200 = 60 false positives

- Family Wise Error Rate correction: expect no false positives (probability of having at least one false positive is ≤ 0.05)

Benjamini-Hochberg correction: the p-values are sorted in increasing order and the i-th p-value is considered significant if it’s less or equal to $\frac{i\alpha}{n}$.

Bonferroni correction: p-values less or equal to α/n are considered significant.

After performing Benjamini-Hochberg (FDR) correction:

res.head(6)

| pval | qval | de | |

|---|---|---|---|

| gene | |||

| FLRT3 | 9.418961e-10 | 0.000001 | True |

| PLCG1-AS1 | 3.296634e-09 | 0.000002 | True |

| RBM39 | 3.591833e-09 | 0.000002 | True |

| TOP1 | 5.383997e-09 | 0.000002 | True |

| RALGAPB | 5.399923e-09 | 0.000001 | True |

| RTFDC1 | 7.302393e-08 | 0.000016 | True |

res['qval'].plot.hist(bins=20)

<matplotlib.axes._subplots.AxesSubplot at 0x1a1e998358>